A curious guide to Entropy: Part 2 - Bits and Boltzmann Entropy

This is the second part of a three-part introductory series exploring the idea of Entropy, from its origins in Thermodynamics to its applications today in Machine Learning. Part 1 of the series can be found here.

Consider this series to be a three-course menu. In part one you had an appetizer, today you will feast through the main course, before closing with a delicious dessert in part three.

Ready?

Where we left off

“Maxwell's Daemon” was daemonic not so much because of her behavior as a particle bouncer, but because she traumatized mathematicians and physicists alike.

We ended the last article with that nagging feeling that Maxwell “swept something under the rug”. But what was it?

Big Idea 1: Information as Energy

It was not until 1929 that the Hungarian physicist Leo Szilard made a discovery suggesting that Maxwell was wrong: entropy doesn’t simply decrease in “Maxwell’s Daemon” (the 2nd Law of Thermodynamics holds).

What Szilard found under the rug was information. More precisely, the act of acquiring information. Considering the daemon, all Maxwell thought of was her act of opening and closing the connecting door.

He was firmly footed in the physical world. To him, whatever happened in the mind of the daemon was not part of the physical system. It didn’t have relevance in terms of energy.

In terms of energy, Maxwell saw the physical interactions with the world and the mental activities of the daemon as completely separate.

But Szilard said: Not so fast. Actually, work is done here. The daemon is observing and measuring, and this mental process requires energy. And it is exactly this cost that compensates for the apparent decrease in entropy that takes place in the system.

Historical side note: In order to make this distinction, Szilard worked with what we would today call a “bit” of information: the amount required to answer a yes/no (fast/slow) question. You might have heard the notion of a “bit” before. The name itself only came later, with Claude Shannon’s information theory in 1948, but the idea is already here.

As part of the main course, this was your side salad. Let us now come, bit by bit (😉), to the main course.

Big Idea 2: Boltzmann Entropy

Maxwell’s suggestion that the 2nd Law of Thermodynamics does not always hold was a major one. As you might imagine, when the foundations of a discipline are called into question, it causes quite a stir (as evident from Maxwell’s exploding Twitter account).

Maxwell was a scientist in a field that is known today as statistical mechanics. In statistical mechanics physical systems no longer have deterministic, predictable behavior but behave randomly and have probabilities associated with them.

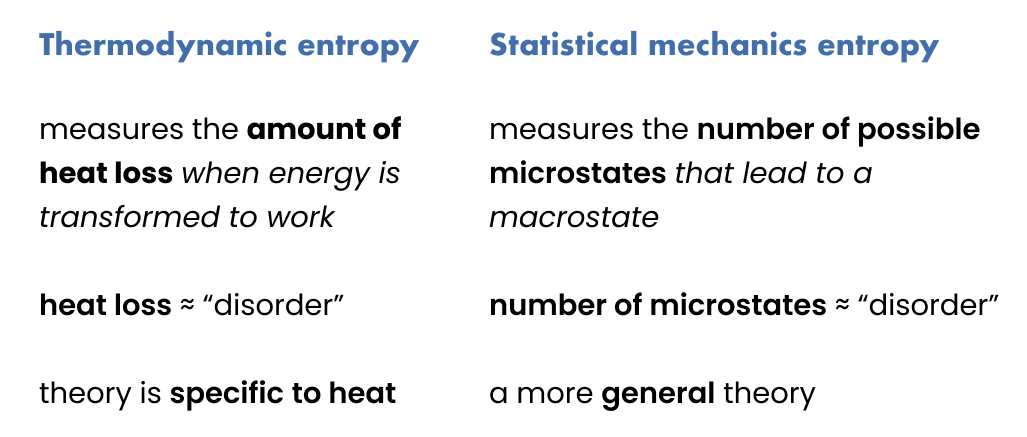

Along with Maxwell, Ludwig Boltzmann, an Austrian physicist who lived at the same time but was somewhat younger, was one of the most colorful figures in the field. It’s actually because of Boltzmann that entropy moved beyond its narrower interpretation in thermodynamics to become known as a more general “measure of disorder” (which was not bound to just thermodynamic systems anymore).

While thermodynamics was described as the “study of heat and thermal energy”, statistical mechanics is a more general mathematical framework that explains how macroscopic, “observable”, properties of a system – such as heat – arise from large quantities of microscopic components and their interactions – e.g. atoms or molecules.

This is getting a little bit involved so I would like to come back to the previous example of “Maxwell’s club”. Let’s call it “Maxhain” for a change (This article is written in Berlin, right?).

Well, anyways, let’s give our bouncer a day off and also, take out that damn door.

Figure 1: “Maxhain”

If you look at this image what you will see is a number of people, I mean particles, each with a certain velocity and position in the club. What you see in the image is called a microstate.

A microstate describes the positions and velocities of all particles in the system at a single point in time. So, just to repeat that: One microstate describes the state of the whole system (in our case Maxhain with all its particles, their velocities and positions) at one point in time.

A microstate describes the configuration of a whole system at one point in time.

Imagine the blue particle in the right corner, let’s call it particle 67, changes position but the rest stays the same. Then this results in a completely new microstate.
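If you like to see ideas as code, here is a minimal sketch of a microstate. The `Particle` class, the grid coordinates and the numbers are made up for illustration (they are not taken from the figure); the only point is that moving a single particle already gives a different microstate.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class Particle:
    """One guest in the club (hypothetical representation)."""
    position: Tuple[int, int]  # (x, y) spot on the dance floor
    velocity: Tuple[int, int]  # (vx, vy) in spots per time step


# A microstate is simply ALL particles with their positions and velocities
# at one instant.
microstate_a = (
    Particle(position=(0, 0), velocity=(1, 0)),
    Particle(position=(3, 2), velocity=(0, -1)),
    Particle(position=(5, 4), velocity=(2, 2)),
)

# Move only the last particle one spot; everything else stays the same.
moved = replace(microstate_a[-1], position=(5, 3))
microstate_b = microstate_a[:-1] + (moved,)

print(microstate_a == microstate_b)  # False: it is a new microstate
```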

Did you know that we generally tend to breathe too shallowly? Let’s actually take a breathing break together. Let’s breathe in through the nose (and I mean it: do it), and then slightly longer out through the nose. I will wait here.

Great. Feels better, doesn’t it? Back to the main course. What we have now is a way to describe a system. But how do we get to entropy? And what does it all mean? There is still so much on the plate.

Well, what Boltzmann suggests is the following: Instead of looking at “heat loss” as disorder, we look at the number of microstates that correspond to a given macrostate.

We already talked about microstates, but now you possibly ask: what the hell are macrostates? And you are right: What are they?

A macrostate can simply be described as an “aggregated” behavior of the system that we are interested in. So in the case of the club, we could be interested in the macrostate of “VIP party”, i.e. the state where all slow people (particles) are on the left, and all the hot, “very important” people are on the right. Let’s look at it.

Figure 2: Maxhain in the macrostate “VIP Party”

Note: What you see in this image is one microstate. And now here comes the trick: It corresponds to the “aggregated”, higher-level, observable macrostate “VIP Party”.
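One way to picture the relationship: a macrostate is a label you compute from a microstate. The sketch below is a simplification under my own assumptions: each particle is reduced to a `(side, speed)` pair, and the function name `macrostate` and the label `"something else"` are hypothetical.

```python
from typing import List, Tuple

# Each particle is (side, speed): side is "left" or "right",
# speed is "slow" or "fast". Exact positions are ignored in this sketch.
Particle = Tuple[str, str]


def macrostate(microstate: List[Particle]) -> str:
    """Return the macrostate label that a given microstate belongs to."""
    sorted_out = all(
        (speed == "slow" and side == "left")
        or (speed == "fast" and side == "right")
        for side, speed in microstate
    )
    return "VIP Party" if sorted_out else "something else"


vip = [("left", "slow"), ("left", "slow"), ("right", "fast")]
mixed = [("right", "slow"), ("left", "slow"), ("right", "fast")]

print(macrostate(vip))    # VIP Party
print(macrostate(mixed))  # something else
```

Many different microstates get the same label, and that is exactly what we will count next.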

And now it’s getting interesting. How do we move from this to actually measuring entropy? Luckily, it’s simpler than you might think. Let’s first recall the definition of entropy.

Entropy in statistical mechanics measures the number of possible microstates that lead to a macrostate.

So, if we have two macrostates (“VIP Party” and maybe let’s call the other one “Oktoberfest”), we can simply start counting the number of microstates corresponding to each macrostate.

Since we are still in a club, let’s assume the people can only move (or dance) in discrete steps but they are not able to make a ½ step or similar. First, dancing like this looks better and it also makes the math a little bit easier! ;-) (Which is also a good motivation)
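With discrete steps, counting becomes something a computer can do by brute force. Here is a toy sketch, assuming a tiny club of my own invention: six spots (three on the left, three on the right), two slow and two fast particles, and several particles allowed to share a spot. Velocities are ignored, so this only counts positions.

```python
from collections import Counter
from itertools import product

LEFT_SPOTS = [0, 1, 2]
RIGHT_SPOTS = [3, 4, 5]
ALL_SPOTS = LEFT_SPOTS + RIGHT_SPOTS
SPEEDS = ["slow", "slow", "fast", "fast"]  # one entry per particle


def macrostate(spots):
    """Label a microstate (one spot per particle) with its macrostate."""
    sorted_out = all(
        (spot in LEFT_SPOTS) if speed == "slow" else (spot in RIGHT_SPOTS)
        for spot, speed in zip(spots, SPEEDS)
    )
    return "VIP Party" if sorted_out else "Oktoberfest"


# Enumerate every possible microstate and tally the macrostate it belongs to.
counts = Counter(
    macrostate(spots) for spots in product(ALL_SPOTS, repeat=len(SPEEDS))
)

print(counts["VIP Party"], counts["Oktoberfest"])  # 81 1215
```

Even in this tiny club the mixed macrostate already has 15 times as many microstates as the sorted one.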

Okay, time for another breath. You know the drill: in through the nose and then slightly longer out through the nose. Let’s do it!

Amazing. With fresh energy let’s get to the final piece on the plate.

Boltzmann defined entropy as follows:

S = k log W

It is also handily engraved on his tombstone in Vienna, in case you should forget it and would like to look it up.

Figure 3: Boltzmann really loved entropy (Photograph by Thomas Schneider)

Let’s unpack this:

S is simply entropy (of a given macrostate).

k is Boltzmann’s constant and (given the origin in thermodynamics) is measured in joules per kelvin.

log is the natural logarithm.

And W is the number of microstates corresponding to the macrostate.

Uff. Lots of stuff. But don’t despair, we will simplify this. At its core, the equation simply states:

S (entropy of macrostate) ∝ log W (no. of microstates)

The entropy of a macrostate grows with its corresponding number of microstates.

The k and the log mainly take care of scale and units. Since Boltzmann dealt with physical systems where you could often encounter enormous numbers of microstates, the natural log “scales down” those numbers to make them more manageable (and it makes the entropies of two independent systems simply add up), while the Boltzmann constant (which is a very small decimal number) converts the result into thermodynamic units.
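To get a feeling for what the log does, here is a small sketch. The function name `boltzmann_entropy` and the example numbers are my own; the value of the Boltzmann constant is the exact SI one. It shows two things: a gigantic W turns into a modest number, and when you combine two independent systems (their microstate counts multiply), their entropies add.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in joules per kelvin (exact SI value)


def boltzmann_entropy(num_microstates: int, k: float = K_B) -> float:
    """S = k log W, with log being the natural logarithm."""
    return k * math.log(num_microstates)


# 1) The log tames enormous numbers (k = 1 to look at the log alone).
huge = boltzmann_entropy(10**23, k=1.0)
print(round(huge, 2))  # 52.96

# 2) Two independent systems: W multiplies, S adds.
w_a, w_b = 1_000, 10_000
combined = boltzmann_entropy(w_a * w_b, k=1.0)
separate = boltzmann_entropy(w_a, k=1.0) + boltzmann_entropy(w_b, k=1.0)
print(math.isclose(combined, separate))  # True
```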

To repeat, the primary thing that is happening here is to connect S with W. If you understand this, you are already at 90%.

An example

Let’s actually calculate the entropy for our two macrostates.

Remember, this is the equation:

S = k log W

Now let’s plug in the numbers.

Determine the number of microstates.

Let’s recall our two macrostates: VIP party (slow particles on the left, fast particles on the right) and Party of the people a.k.a. Oktoberfest (all particles mixed up).

Figure 4: VIP party (left), Oktoberfest party (right)

I hope it makes intuitive sense that if a microstate should be counted as corresponding to the “VIP Party” macrostate, there are far fewer options simply because blue particles need to stay on the left and red particles need to stay on the right. When one of the particles crosses the room, it is the beginning of an Oktoberfest party (and people dancing on the tables).

What does that mean?

It means that we have way more possibilities to arrange particles when they are free to move across the two rooms. Correspondingly, we have fewer possibilities if the particles of each group have to stay in their respective room.

Let’s suppose the following: There are 10,000 different ways to arrange our particles at the Oktoberfest party (i.e. 10,000 microstates) when people are allowed to cross rooms. But given there are fewer options and tables if everyone has to stay in their respective space, it’s just 1,000 different ways (i.e. 1,000 microstates) at the VIP party.

For simplicity, we can also set k = 1. Since this is the Boltzmann constant it is probably not too happy about it, but let’s do it anyway. It is a scaling factor and doesn’t influence the general result.

We arrive at:

S = k log W

k = 1

S (“Oktoberfest”) = 1 * log 10,000 ≈ 9.21

S (“VIP Party”) = 1 * log 1,000 ≈ 6.91
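If you want to check these numbers yourself, a few lines of Python are enough. This is just the calculation above typed out, with the made-up microstate counts from this example and k = 1; the variable names are my own.

```python
import math

k = 1  # simplified "Boltzmann constant" for this example
microstates = {"Oktoberfest": 10_000, "VIP Party": 1_000}

# S = k log W for each macrostate (math.log is the natural logarithm).
entropy = {name: k * math.log(w) for name, w in microstates.items()}

for name, s in entropy.items():
    print(f"S({name}) = {s:.2f}")
# S(Oktoberfest) = 9.21
# S(VIP Party) = 6.91
```

Note that ten times more microstates does not mean ten times more entropy: the difference between the two values is log 10, roughly 2.30.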

Interpretation

If we just look at the numbers, you should be able to observe one thing: The more microstates correspond to a macrostate, the higher the entropy and, assuming every microstate is equally likely, the more likely that macrostate is.

And, finally, after all this work we can now make full sense of what Maxwell meant when he said that the 2nd Law of Thermodynamics is not a law at all but should rather be seen as a “statistical certainty”.

The disordered macrostate, i.e. the Oktoberfest, is on average, statistically, way more likely to emerge as time passes and no external force is applied. It’s not a guarantee. There is a theoretical possibility that we arrive at the ordered state (the VIP party), it’s just that it’s so much less probable that you can practically ignore it.
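How improbable is “so much less probable”? Here is a back-of-the-envelope sketch under a deliberately crude assumption of mine: every particle is independently on the left or the right with probability ½, so the chance that all of them happen to sit on their “correct” side is (½) to the power of the number of particles. The function name is hypothetical.

```python
def probability_sorted(num_particles: int) -> float:
    """Chance that every particle is on its 'VIP' side, assuming each one
    is independently left or right with probability 1/2."""
    return 0.5 ** num_particles


for n in (4, 10, 100):
    print(n, probability_sorted(n))
```

With 10 particles you are at roughly one in a thousand. With 100 particles the probability is below 10⁻³⁰, and a real gas has vastly more particles than that.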

In turn, the 2nd law of Thermodynamics can be rewritten as follows: An isolated system will tend to progress to the most probable macrostate (which is the one with the most corresponding microstates).
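You can watch this tendency in a toy simulation. The model below is my own simplification (similar in spirit to the Ehrenfest urn model, not something Boltzmann or Maxwell wrote down): we start with a perfect VIP party and, at every step, one randomly chosen particle crosses the room. We then track how many particles are on the “wrong” side.

```python
import random


def simulate(num_per_group: int = 50, steps: int = 2000, seed: int = 0):
    """Return the number of misplaced particles after each step."""
    rng = random.Random(seed)
    # Start in the ordered macrostate: slow particles left, fast ones right.
    speeds = ["slow"] * num_per_group + ["fast"] * num_per_group
    sides = ["left"] * num_per_group + ["right"] * num_per_group
    history = []
    for _ in range(steps):
        i = rng.randrange(len(sides))  # pick a random particle ...
        sides[i] = "right" if sides[i] == "left" else "left"  # ... it crosses
        # Misplaced = slow particle on the right or fast particle on the left.
        misplaced = sum(
            1
            for speed, side in zip(speeds, sides)
            if (speed == "slow") != (side == "left")
        )
        history.append(misplaced)
    return history


history = simulate()
print("after first step:", history[0])  # 1
print("after last step:", history[-1])
```

Nothing pushes the particles toward disorder here. The count of misplaced particles simply drifts to around half of all particles and hovers there, because that is where almost all the microstates are.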

Amazing. If you really stuck with me this whole article I just want to say how much I appreciate this and what an amazing, curious mind you have! This was by no means an easy article to read (and write)! 😁

Coming to the end, let’s take one final breath and reflect back on what we did today.

We learned about Szilard and the dirty secrets that Maxwell kept under the carpet (namely information).

We then looked at statistical mechanics as a more general mathematical framework to study physical systems.

And we learned about Boltzmann entropy as a measure of disorder and how this measure of entropy is less about “heat loss” and more about probabilities of states.

Closing words

If you should take away one thing from this article I want it to be this: The notion of entropy has changed over time. I struggled so much, I almost pulled my hair out, trying to make sense of what “disorder” meant when I first tried to connect thermodynamic entropy to Boltzmann entropy. I only relaxed when I started to accept that over time the definition of entropy changed, from a measure of heat loss in thermodynamics to a statistical measure that relates the likelihood of a macrostate to the number of microstates corresponding to it. There is no 1:1 connection here; it is simply a progression, a generalization of thought.

I hope this helps. It all makes sense.

With that, see you for part 3, I need to get back to the kitchen and start preparing the dessert! 🍮

This article was the second part of my series to introduce Entropy in a fun and entertaining way. If you are interested in more of my writing and related ideas to this and other topics, please subscribe to my newsletter.